Multimodal AI: Reshaping Search and Discovery in Retail and Travel

As we reach the midpoint of 2024, it's an ideal time to reflect on the emerging trends that have shaped our perspectives. For me, the standout is Multimodal Large Language Models (MLLMs).

2023 was a game-changer for AI, largely thanks to ChatGPT. We saw a surge in large language models (LLMs) and generative AI, which made everything from chatting with bots to generating content way faster and better.

I won't lie—I wasn’t very fond of the AI hype. Seeing everyone generate low-quality stock images for their posts and slides, and being wowed by trivial advancements, was honestly quite frustrating.

While the generative AI hype still prevails, I do have to admit, it is maturing. Slowly.

These advancements and the growing consumer adoption of AI technology have paved the way for what we’re seeing in 2024: the emergence of MLLMs.

‘Multimodality’ is a relatively new term for an old concept: the way humans have always learned. People gather information through various senses like sight, sound, and touch, and our brains merge these different types of ‘data’ to create our understanding of reality.

So basically, multimodal language models are advanced AI systems that can process and understand multiple types of data, such as text, images, audio, and video, all at once. I know, shocking, right?

In fact, many people have already interacted with aspects of multimodal AI without realizing it.

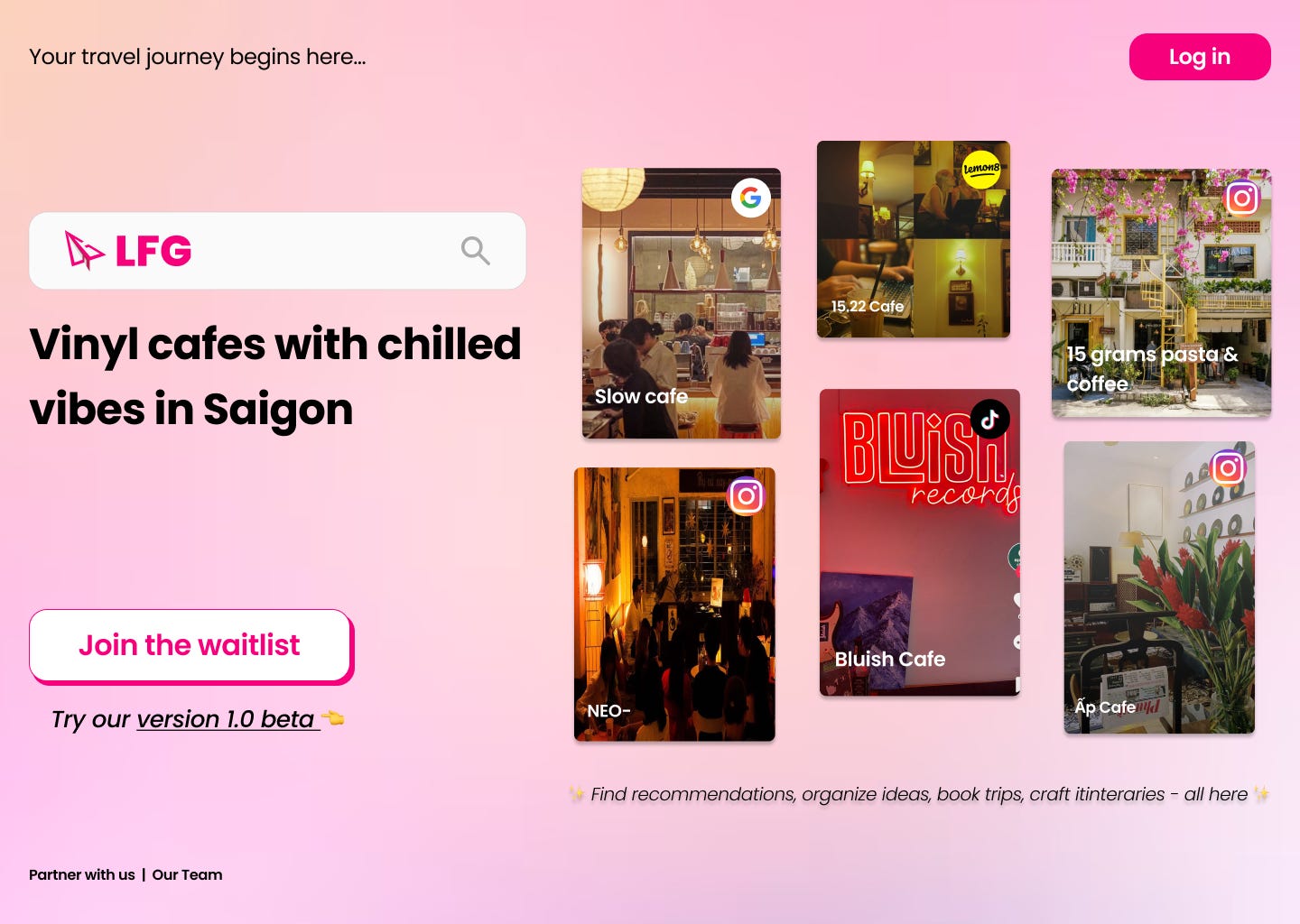

I didn't even realize I was building my startup, LFG, around this technology and its concept. Learning about multimodal AI has completely shifted my views on its implications and potential.

The Rise of Visual Commerce in Retail

(An emerging trend that has stood out for me this year)

So far, the most exciting trend I’ve seen in 2024 is the rise of visual commerce, especially in the fashion and beauty sector. Multimodal AI is making waves here by letting consumers search and buy using natural language, images, and videos, transforming how we shop for clothes, accessories, and beauty products.

In the US, startups focused on multimodal search have attracted significant funding and support, such as Daydream (US$50M seed funding) and Lumona (YC W24), underscoring the growing importance of this technology.

With ViSenze (a Singapore tech company at the forefront of multi-search), for example, you can upload a photo of a dress you love (even from a social media post), and their AI-powered search will find similar styles available for purchase. This makes shopping more engaging and personalized, and it’s clear that visual content is becoming a major player in retail decisions.

The Shift Towards Personalized Travel Experiences

(Insights gained about the direction of the travel industry)

While this technology is still being experimented with and refined in the fashion and beauty sectors, I believe its potential impact on the travel industry is even more profound. Travel spans a whole range of services and experiences, from flights and hotels to tours and local attractions, so the sector is huge on its own.

Consumer mindsets are shifting: personalization is no longer optional but essential. Multimodal AI can simplify and personalize these offerings by analyzing a combination of text, images, and videos, making it easier for travelers to discover exactly what they're looking for and enhancing their overall experience.

For instance, a traveler searching for "hidden speakeasy-style late-night bars in Kuala Lumpur" can benefit from an AI that processes not only the text of the query but also images and videos of venues to find the best match. This leads to more precise, personalized recommendations and higher user satisfaction.
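To make the idea a little more concrete, here is a minimal sketch of one common way to combine text and image signals when ranking results: a weighted "late fusion" of similarity scores. It assumes each venue already has a text embedding and an image embedding living in the same vector space as the query (as CLIP-style models produce). The three-dimensional vectors, venue names, and the `text_weight` parameter are all made up for illustration and do not come from any real product.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_venues(query_vec, venues, text_weight=0.5):
    """Rank venues by a weighted mix of text and image similarity to the query.

    `venues` is a list of (name, text_vec, image_vec) tuples; `text_weight` is a
    hypothetical tuning knob: 1.0 uses only descriptions, 0.0 only photos.
    """
    scored = []
    for name, text_vec, image_vec in venues:
        score = (text_weight * cosine(query_vec, text_vec)
                 + (1 - text_weight) * cosine(query_vec, image_vec))
        scored.append((score, name))
    scored.sort(reverse=True)  # highest combined score first
    return [name for _, name in scored]


# Hypothetical toy embeddings; a real system would get these from a multimodal model.
# Read the dimensions loosely as: "hidden/speakeasy", "rooftop/open-air", "late-night".
QUERY = [0.9, 0.1, 0.8]  # "hidden speakeasy-style late-night bars in Kuala Lumpur"
VENUES = [
    ("Hidden Bar A", [0.8, 0.0, 0.6], [0.9, 0.1, 0.9]),
    ("Rooftop Bar B", [0.2, 0.9, 0.1], [0.1, 1.0, 0.2]),
    ("Hotel Lounge C", [0.7, 0.2, 0.1], [0.2, 0.3, 0.1]),
]

print(rank_venues(QUERY, VENUES))  # ['Hidden Bar A', 'Hotel Lounge C', 'Rooftop Bar B']
```

Raising or lowering `text_weight` shifts how much the photos and videos count relative to the written description. A production system would typically search a large catalogue with an approximate nearest-neighbour index rather than scoring every venue in a loop like this.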

Some further applications of multimodal AI in travel that I’m eager to build and see developed include:

Dynamic pricing: Adjusting prices and offers in real-time based on market trends and user behavior, maximizing revenue and satisfaction.

Streamlined bookings: Understanding natural language queries and providing instant booking assistance and results, improving user experience.

Smart assistants: Offering real-time support with voice commands, travel document and location analysis, and instant translations, making travel easier and more enjoyable.

Embracing Multimodal AI for Future Growth

(Lesson learned that will shape my approach for the rest of 2024)

As multimodal AI continues to advance, it is set to shape the future of commerce in many forms, driving growth and enhancing the travel and shopping experience for consumers worldwide.

As a travel startup, we’ll definitely be exploring and leveraging these multimodal applications to redefine how travelers search, discover, and experience the world, making the journey more intuitive and enjoyable before the trip even begins.

Thanks for reading,

D. Han